7.5. QR factorization#

Square matrix \(A\) can be decomposed as \(A=QR\) where \(Q\) is an orthogonal matrix (\(Q^{*}Q=QQ^{*}=I\)) and \(R\) is an upper triangular matrix. If \(A\) is invertible and \(R\) is positive, then the factorization is unique.

Furthermore, there are several methods for computing the \(QR\) decomposition such as the Gram–Schmidt process, and the Householder transformations, … Here, we use the Gram–Schmidt process.

Consider matrix \(A = \begin{bmatrix}| & | & & | \\ \vec{a}_1 & \vec{a}_2 & \dots & \vec{a}_n \\ | & | & & | \end{bmatrix}\) were \( \vec{a}_i\) are the columns of the full column rank matrix \(A\). Now, define the projection as follows:

Therefore,

Now, \(\vec{a}_{i}\) can be expressed as

where \(\left\langle \vec{e}_{i},\vec{a}_{i}\right\rangle =\left\|\vec{u}_{i}\right\|\). This can be written in matrix form:

See [Allaire, Kaber, Trabelsi, and Allaire, 2008, Khoury and Harder, 2016] for the full derivation of this algorithm.

import numpy as np

import pandas as pd

def myQR(A):

'''

Square matrix $A$ can be decomposed as A=QR

where Q is an orthogonal matrix and

R is an upper triangular matrix.

Parameters

----------

A : numpy array

DESCRIPTION. Matrix A

Returns

-------

Q : numpy array

DESCRIPTION. Matrix Q from A = QR

R : numpy array

DESCRIPTION. Matrix R from A = QR

'''

n = A.shape[0]

Q = np.zeros([n,n], dtype = float)

R = np.zeros([n,n], dtype = float)

A = A.astype(float)

for j in range(n):

T = A[:, j]

for i in range(j):

R[i, j] = np.dot(Q[:, i], A[:, j])

T -= R[i, j] * Q[:, i]

R[j, j] = np.sqrt(np.dot(T, T))

Q[:, j] = T /R[j, j]

del T

return Q, R

function [Q, R] = myQR(A)

%{

Square matrix $A$ can be decomposed as A=QR

where Q is an orthogonal matrix and

R is an upper triangular matrix.

Parameters

----------

A : numpy array

DESCRIPTION. Matrix A

Returns

-------

Q : numpy array

DESCRIPTION. Matrix Q from A = QR

R : numpy array

DESCRIPTION. Matrix R from A = QR

%}

n=length(A);

Q =zeros(n,n);

R = zeros(n,n);

for j=1:n

T = A(:, j);

for i=1:j

R(i, j) = dot(Q(:, i), A(:, j));

T = T - R(i, j) * Q(:, i);

end

R(j, j) = sqrt(dot(T, T));

Q(:, j) = T /R(j, j);

end

Example: Apply QR decomposition on the following matrix and identify \(Q\) and \(R\).

Solution: We have,

import numpy as np

from hd_Matrix_Decomposition import myQR

from IPython.display import display, Latex

from sympy import init_session, init_printing, Matrix

A = np.array([ [7, 3, -1, 2], [3, 8, 1, -4], [-1, 1, 4, -1], [2, -4, -1, 6] ])

Q, R = myQR(A)

display(Latex(r'Q ='), Matrix(np.round(Q, 2)))

display(Latex(r'R ='), Matrix(np.round(R, 2)))

display(Latex(r'QR ='), Matrix(np.round(Q@R, 2)))

display(Latex(r'QQ^T ='), Matrix(np.round(Q@(Q.T), 2)))

display(Latex(r'Q^TQ ='), Matrix(np.round((Q.T)@Q, 2)))

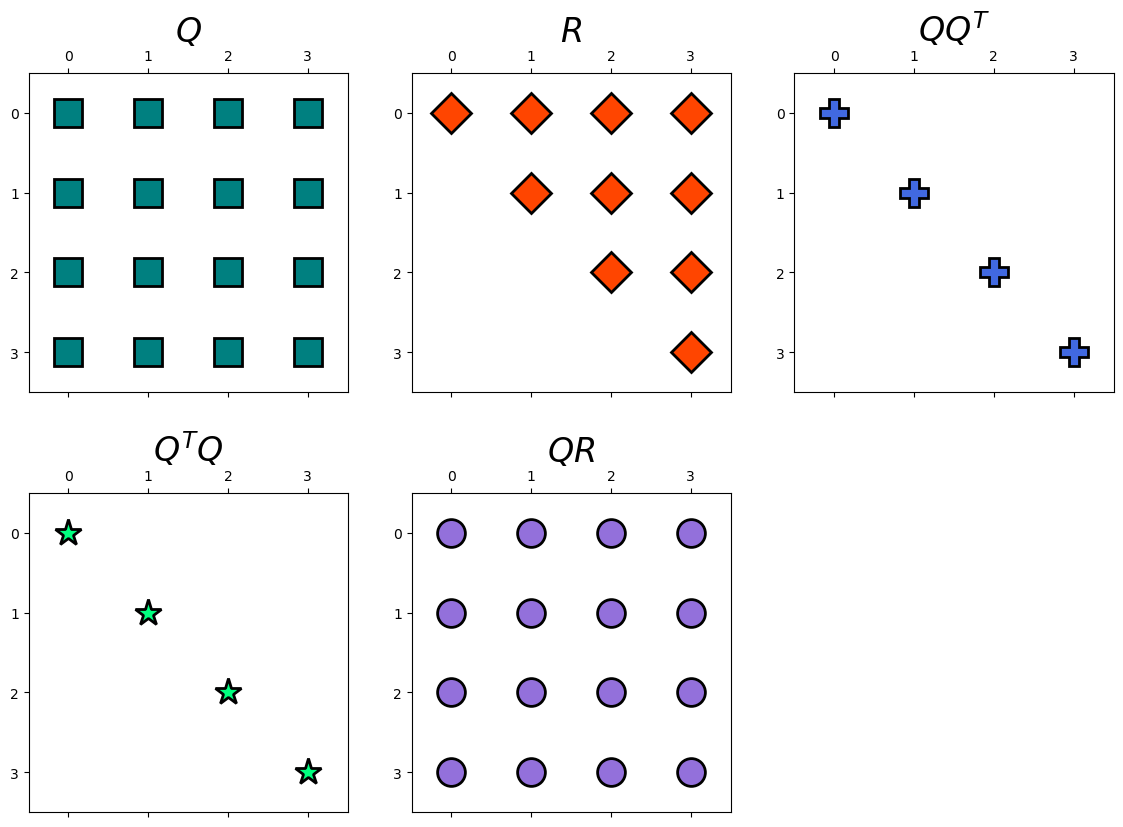

hd.matrix_decomp_fig(mats = [Q, R, np.round(Q@(Q.T), 1), np.round((Q.T)@Q, 1), np.round(np.matmul(Q, R), 2)],

labels = ['$Q$', '$R$', '$QQ^T$', '$Q^TQ$', '$QR$'],

nrows=2, ncols=3, figsize=(14, 10))

Note that we could get a similar results using function, np.linalg.qr.

import scipy.linalg as linalg

Q, R = linalg.qr(A)

display(Latex(r'Q ='), Matrix(np.round(Q, 2)))

display(Latex(r'R ='), Matrix(np.round(R, 2)))

display(Latex(r'QR ='), Matrix(np.round(Q@R, 2)))

display(Latex(r'QQ^T ='), Matrix(np.round(Q@(Q.T), 2)))

display(Latex(r'Q^TQ ='), Matrix(np.round((Q.T)@Q, 2)))

7.5.1. Solving Linear systems using QR factorization#

We can solve the linear system \(Ax=b\) for \(x\) using QR factorization. To demonstrate this, we use the following example,

Example: Solve the following linear system using QR decomposition.

Solution:

Let \(A=\left[\begin{array}{cccc} 7 & 3 & -1 & 2\\ 3 & 8 & 1 & -4\\ -1 & 1 & 4 & -1\\ 2 & -4 & -1 & 6 \end{array}\right]\) and \(b=\left[\begin{array}{c} 18\\ 6\\ 9\\ 15 \end{array}\right]\). Then, this linear system can be also expressed as follows,

We have,

A = np.array([ [7, 3, -1, 2], [3, 8, 1, -4], [-1, 1, 4, -1], [2, -4, -1, 6] ])

b = np.array([[18],[ 6],[ 9],[15]])

Q, R = myQR(A)

display(Latex(r'Q ='), Matrix(np.round(Q, 2)))

display(Latex(r'R ='), Matrix(np.round(R, 2)))

Now we can solve the following linear systems instead

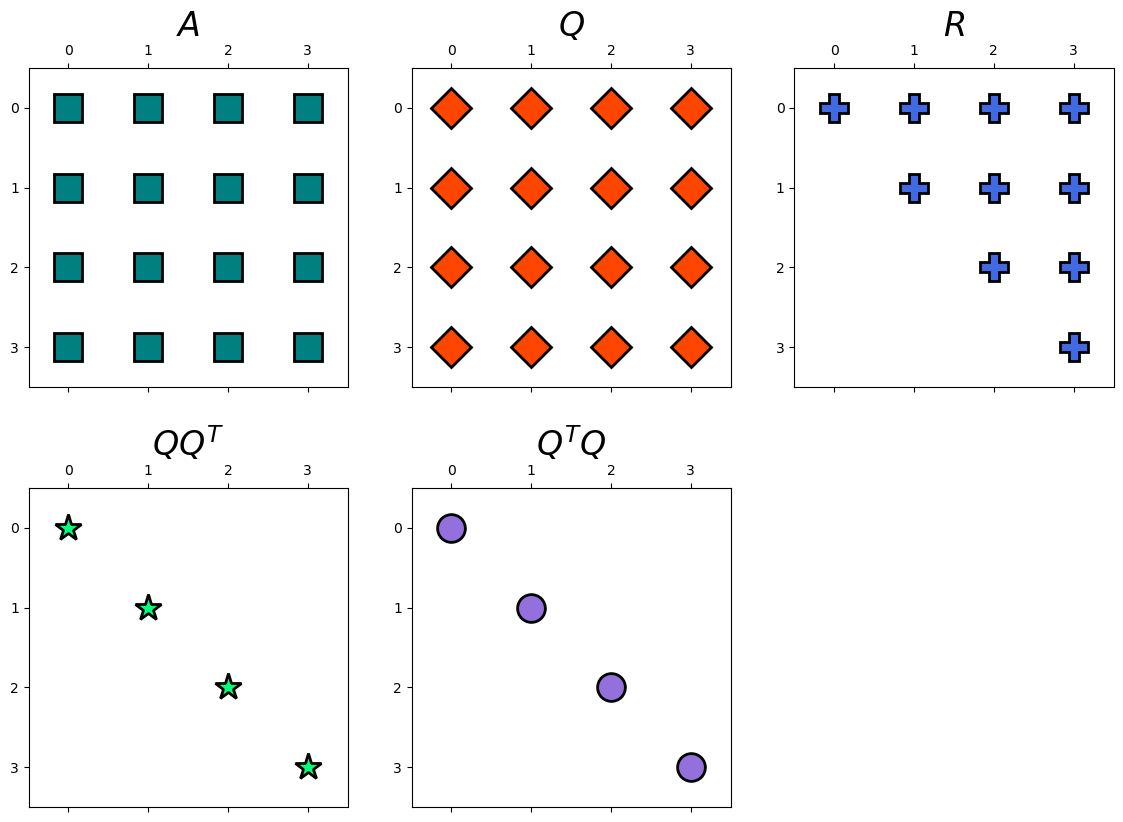

hd.matrix_decomp_fig(mats = [A, Q, R, np.round(Q@(Q.T), 1), np.round((Q.T)@Q, 1)],

labels = ['$A$', '$Q$', '$R$', '$QQ^T$', '$Q^TQ$'],

nrows=2, ncols=3, figsize=(14, 10))

# solving y

y = np.linalg.solve(Q, b)

display(Latex(r'y ='), Matrix(np.round(y, 2)))

# solving Ux=y for x

x = np.linalg.solve(R, y)

display(Latex(r'x ='), Matrix(np.round(x, 2)))

Let’s now solve the linear system directly and compare the results.

x_new = linalg.solve(A, b)

display(Latex(r'x ='), Matrix(np.round(x_new, 2)))