12.5. Tensors in Various Operations (Ops)

Tensors are the fundamental building blocks of TensorFlow, and they can be manipulated by a wide range of operations, commonly abbreviated as “Ops.” These operations cover essential tasks such as computation, transformation, and manipulation of data [TensorFlow Developers, 2023]. The following are some of the main categories of operations in TensorFlow:

Math Operations:

These operations perform basic arithmetic, algebraic, trigonometric, and other mathematical functions on tensors.

Example:

tf.add, tf.subtract, tf.multiply, tf.divide, etc.

Array Operations:

These operations change the shape, size, order, and content of tensors in various ways, such as reshaping, slicing, stacking, concatenating, splitting, and indexing.

Example:

tf.reshape, tf.concat, tf.slice, tf.gather, etc.

String Operations:

These operations handle string tensors (tf.string) and perform tasks such as splitting, joining, measuring length, and converting to numbers.

Example:

tf.strings.split, tf.strings.join, tf.strings.length, tf.strings.to_number, etc.

Control Flow Operations:

These operations enable conditional execution and looping over tensors, using constructs such as if, while, and case.

Example:

tf.cond, tf.while_loop, tf.case, etc.

Reduction Operations:

These operations reduce tensors along specified dimensions, by applying functions such as sum, mean, max, min, etc.

Example:

tf.reduce_sum, tf.reduce_mean, tf.reduce_max, tf.reduce_min, etc.

Sparse Operations:

These operations are designed for sparse tensors, which store tensors with many zeros efficiently by only keeping the non-zero values and their indices.

Example:

tf.sparse.reduce_sum, tf.sparse.to_dense, etc.

Neural Network Operations:

These operations are specifically tailored for building and training neural networks, and include activation functions, loss functions, optimizers, and layers.

Example:

tf.nn.relu, tf.nn.softmax, tf.keras.layers.Dense, etc.

Variable Operations:

These operations deal with the creation, modification, and management of variables, which are mutable tensors that can store and update values.

Example:

tf.Variable, tf.Variable.assign, tf.Variable.assign_add, tf.keras.optimizers.Optimizer, etc.

I/O Operations:

These operations facilitate the reading and writing of data from various sources, such as files, queues, or networks.

Example:

tf.io.read_file, tf.io.decode_image, tf.io.write_file, etc.

Image and Signal Processing Operations:

These operations are specific to image and signal processing tasks, and include functions for resizing, cropping, filtering, and transforming images and signals.

Example:

tf.image.resize, tf.image.crop_and_resize, tf.signal.fft, etc.

These categories provide a comprehensive overview of the operations available in TensorFlow, which can be used for different purposes and applications involving data manipulation, mathematical computations, and machine learning.
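As a minimal sketch of two categories from the list above, the snippet below updates a variable in place and applies two neural-network ops to a plain tensor. The variable names and sample values are illustrative assumptions, not part of the original text.

```python
import tensorflow as tf

# A variable is a mutable tensor; its assign* methods update it in place.
v = tf.Variable([1.0, 2.0, 3.0])
v.assign([10.0, 20.0, 30.0])     # overwrite all values
v.assign_add([1.0, 1.0, 1.0])    # element-wise in-place addition

# Neural-network ops are ordinary functions on tensors.
logits = tf.constant([-1.0, 0.0, 1.0])
activated = tf.nn.relu(logits)          # negative entries become zero
probabilities = tf.nn.softmax(logits)   # non-negative entries that sum to 1

print(v.numpy())
print(activated.numpy())
print(probabilities.numpy())
```

In TensorFlow 2 the update calls are methods on the variable object itself, which is why no separate assignment function appears here.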

Note

To access the full list of symbols in TensorFlow 2, you can visit the [TensorFlow API documentation] and browse through the categories and modules. Alternatively, you can use the search bar to find a specific symbol by name or keyword.

12.5.1. Math Operations

Math operations are a subset of TensorFlow operations that perform basic arithmetic, algebraic, trigonometric, and other mathematical functions on tensors. Math operations can be useful for various purposes and applications involving numerical computations and analysis.

Some of the common math operations are [TensorFlow Developers, 2023]:

Arithmetic Operations: These operations perform element-wise addition, subtraction, multiplication, and division of tensors, as well as other arithmetic functions such as power, modulus, and sign.

Example:

tf.add, tf.subtract, tf.multiply, and tf.divide perform element-wise addition, subtraction, multiplication, and division of tensors, respectively. tf.pow, tf.math.floormod, and tf.sign perform element-wise power, modulus, and sign of tensors, respectively.

Code:

import tensorflow as tf

# Perform element-wise addition of two tensors

x = tf.constant([1, 2, 3])

y = tf.constant([4, 5, 6])

tf.add(x, y)

# <tf.Tensor: shape=(3,), dtype=int32, numpy=array([5, 7, 9], dtype=int32)>

# Perform element-wise power of a tensor

z = tf.constant([2, 3, 4])

tf.pow(z, 2)

# <tf.Tensor: shape=(3,), dtype=int32, numpy=array([ 4, 9, 16], dtype=int32)>

<tf.Tensor: shape=(3,), dtype=int32, numpy=array([ 4, 9, 16])>
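The division, modulus, and sign functions named above are not exercised by the example, so here is a short sketch with assumed sample values. It is meant to show two details that are easy to miss: dividing integer tensors with tf.divide yields a floating-point result, and tf.math.floormod follows the sign of the divisor.

```python
import tensorflow as tf

x = tf.constant([7, -7, 0])
y = tf.constant([2, 2, 2])

quotient = tf.divide(x, y)           # true division: integer inputs give a float result
remainder = tf.math.floormod(x, y)   # floor-based modulus, so -7 mod 2 is 1
signs = tf.sign(x)                   # -1, 0, or 1 for each element

print(quotient)
print(remainder)
print(signs)
```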

Linear Algebra Operations: These operations perform matrix and vector operations, such as dot product, matrix multiplication, transpose, determinant, inverse, and decomposition.

Example:

tf.linalg.matmul performs matrix multiplication of two tensors. tf.linalg.inv computes the inverse of a square matrix tensor. tf.linalg.eigh computes the eigenvalues and eigenvectors of a symmetric matrix tensor.

Code:

import tensorflow as tf

def print_bold(txt, c=31):
    """
    Display text in bold with optional color.

    Parameters:
    - txt (str): The text to be displayed.
    - c (int): Color code for the text (default is 31 for red).
    """
    print(f"\033[1;{c}m" + txt + "\033[0m")

# Perform matrix multiplication of two tensors

a = tf.constant([[1, 2], [3, 4]])

b = tf.constant([[5, 6], [7, 8]])

result_ab = tf.linalg.matmul(a, b)

print_bold('Matrix multiplication of two tensors:')

print(result_ab)

# Compute the inverse of a square matrix tensor

c = tf.constant([[1, 2], [3, 4]], dtype=tf.float32)

inv_c = tf.linalg.inv(c)

print_bold('\nThe inverse of a square matrix tensor:')

print(inv_c)

# Compute the eigenvalues and eigenvectors of a symmetric matrix tensor

d = tf.constant([[1, 2], [2, 3]], dtype=tf.float32)

eigvals_d, eigvecs_d = tf.linalg.eigh(d)

print_bold('\nThe eigenvalues:')

print(eigvals_d)

print_bold('\nThe eigenvectors:')

print(eigvecs_d)

Matrix multiplication of two tensors:

tf.Tensor(

[[19 22]

[43 50]], shape=(2, 2), dtype=int32)

The inverse of a square matrix tensor:

tf.Tensor(

[[-2.0000002 1.0000001 ]

[ 1.5000001 -0.50000006]], shape=(2, 2), dtype=float32)

The eigenvalues:

tf.Tensor([-0.23606804 4.2360687 ], shape=(2,), dtype=float32)

The eigenvectors:

tf.Tensor(

[[-0.85065085 -0.52573115]

[ 0.52573115 -0.85065085]], shape=(2, 2), dtype=float32)
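One way to sanity-check the results above is to verify the defining identities numerically. The sketch below reuses the same two matrices and additionally calls tf.linalg.det and tf.transpose, which the category description mentions but the example does not show. The variable names are assumptions, and the comparisons are approximate because float32 arithmetic introduces small rounding errors.

```python
import tensorflow as tf

c = tf.constant([[1.0, 2.0], [3.0, 4.0]])
d = tf.constant([[1.0, 2.0], [2.0, 3.0]])

# A matrix times its inverse should be close to the identity.
identity_check = tf.linalg.matmul(c, tf.linalg.inv(c))

# For a symmetric matrix, d @ V should equal V with column j scaled by eigenvalue j.
eigvals, eigvecs = tf.linalg.eigh(d)
lhs = tf.linalg.matmul(d, eigvecs)
rhs = eigvecs * eigvals   # broadcasting scales each column by its eigenvalue

det_c = tf.linalg.det(c)         # 1*4 - 2*3 = -2, up to rounding
c_transposed = tf.transpose(c)   # rows and columns swapped

print(identity_check)
print(tf.reduce_max(tf.abs(lhs - rhs)))
print(det_c)
print(c_transposed)
```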

Trigonometric and Hyperbolic Operations: These operations perform trigonometric and hyperbolic functions on tensors, such as sine, cosine, tangent, arcsine, arccosine, arctangent, sinh, cosh, tanh, etc.

Example:

tf.sin, tf.cos, and tf.tan perform element-wise sine, cosine, and tangent of tensors, respectively. tf.asin, tf.acos, and tf.atan perform element-wise arcsine, arccosine, and arctangent of tensors, respectively. tf.sinh, tf.cosh, and tf.tanh perform element-wise hyperbolic sine, cosine, and tangent of tensors, respectively.

Code:

import tensorflow as tf

import numpy as np

def print_bold(txt, c=31):
    """
    Display text in bold with optional color.

    Parameters:
    - txt (str): The text to be displayed.
    - c (int): Color code for the text (default is 31 for red).
    """
    print(f"\033[1;{c}m" + txt + "\033[0m")

# Perform element-wise sine of a tensor

x = tf.constant([0, np.pi/2, np.pi])

sin_x = tf.sin(x)

print_bold('Element-wise sine of a tensor:')

print(sin_x)

# Perform element-wise arctangent of a tensor

y = tf.constant([-1, 0, 1], dtype='float')

atan_y = tf.atan(y)

print_bold('\nElement-wise arctangent of a tensor:')

print(atan_y)

# Perform element-wise hyperbolic tangent of a tensor

z = tf.constant([-1, 0, 1], dtype='float')

tanh_z = tf.tanh(z)

print_bold('\nElement-wise hyperbolic tangent of a tensor:')

print(tanh_z)

Element-wise sine of a tensor:

tf.Tensor([ 0.000000e+00 1.000000e+00 -8.742278e-08], shape=(3,), dtype=float32)

Element-wise arctangent of a tensor:

tf.Tensor([-0.7853982 0. 0.7853982], shape=(3,), dtype=float32)

Element-wise hyperbolic tangent of a tensor:

tf.Tensor([-0.7615942 0. 0.7615942], shape=(3,), dtype=float32)
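The sine of pi printed above is a tiny non-zero number rather than exactly 0, which is ordinary float32 rounding. The sketch below, with assumed sample angles, checks a few familiar identities to within a tolerance instead of testing for exact equality.

```python
import numpy as np
import tensorflow as tf

x = tf.constant([0.0, np.pi / 6, np.pi / 4, 1.0], dtype=tf.float32)

pythagorean = tf.sin(x) ** 2 + tf.cos(x) ** 2   # should be close to 1 everywhere
tanh_from_ratio = tf.sinh(x) / tf.cosh(x)       # should be close to tf.tanh(x)
roundtrip = tf.atan(tf.tan(x))                  # recovers x for angles in (-pi/2, pi/2)

print(np.allclose(pythagorean.numpy(), 1.0, atol=1e-5))
print(np.allclose(tanh_from_ratio.numpy(), tf.tanh(x).numpy(), atol=1e-5))
print(np.allclose(roundtrip.numpy(), x.numpy(), atol=1e-5))
```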

These are some examples of Math Operations in TensorFlow, which can be used for various purposes and applications involving data manipulation, mathematical computations, and machine learning. For more information and examples, you can refer to the TensorFlow documentation.

Note

There are many more operations that you can use to perform various mathematical computations and transformations on tensors. You can find the complete list in the tf.math module of the TensorFlow API documentation. The tf.keras.backend module also exposes some additional math operations. Note that tf.keras runs only on TensorFlow; support for the Theano and CNTK backends belonged to the older standalone, multi-backend Keras releases.

12.5.2. Array Operations

Array operations are a subset of TensorFlow operations that change the shape, size, order, and content of tensors in various ways, such as reshaping, slicing, stacking, concatenating, splitting, and indexing. Array operations can be useful for various purposes and applications involving data manipulation and transformation.

Some of the common array operations are [TensorFlow Developers, 2023]:

Reshaping: These operations change the shape of a tensor without changing its underlying data. They can be useful for changing the dimensionality or layout of a tensor.

Example:

tf.reshape changes the shape of a tensor to a specified shape. tf.expand_dims and tf.squeeze add or remove dimensions of size 1 from a tensor, respectively.

Code:

import tensorflow as tf

# Change the shape of a tensor to a specified shape

x = tf.constant([1, 2, 3, 4])

reshaped_x = tf.reshape(x, shape=(2, 2))

print_bold('Change the shape of a tensor to a specified shape:')

print(reshaped_x)

# Add a dimension of size 1 to a tensor

y = tf.constant([1, 2, 3])

expanded_y = tf.expand_dims(y, axis=0)

print_bold('\nAdd a dimension of size 1 to a tensor:')

print(expanded_y)

# Remove dimensions of size 1 from a tensor

z = tf.constant([[1], [2], [3]])

squeezed_z = tf.squeeze(z, axis=1)

print_bold('\nRemove dimensions of size 1 from a tensor:')

print(squeezed_z)

Change the shape of a tensor to a specified shape:

tf.Tensor(

[[1 2]

[3 4]], shape=(2, 2), dtype=int32)

Add a dimension of size 1 to a tensor:

tf.Tensor([[1 2 3]], shape=(1, 3), dtype=int32)

Remove dimensions of size 1 from a tensor:

tf.Tensor([1 2 3], shape=(3,), dtype=int32)
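Two shorthand forms are worth knowing alongside the calls above. This is a small sketch with assumed sample data: a -1 in the target shape asks TensorFlow to infer that dimension, and indexing with tf.newaxis inserts a size-1 dimension in the same way as tf.expand_dims.

```python
import tensorflow as tf

x = tf.constant([1, 2, 3, 4, 5, 6])

auto_rows = tf.reshape(x, [-1, 2])   # -1 is inferred from the element count: shape (3, 2)
flat = tf.reshape(auto_rows, [-1])   # back to a 1-D tensor with 6 elements
column = x[:, tf.newaxis]            # same result as tf.expand_dims(x, axis=1)

print(auto_rows.shape, flat.shape, column.shape)
```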

Slicing and Indexing: These operations extract or modify a subset of a tensor based on indices or ranges. They can be useful for accessing or updating specific elements or regions of a tensor.

Example:

tf.slice extracts a slice of a tensor based on a starting position and a size. tf.gather gathers elements from a tensor based on indices. tf.scatter_nd scatters values into a new tensor based on indices.

Code:

import tensorflow as tf

# Extract a slice of a tensor based on a starting position and a size

x = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

sliced_x = tf.slice(x, begin=[0, 1], size=[2, 2])

print_bold('Extract a slice of a tensor based on a starting position and a size:')

print(sliced_x)

# Gather elements from a tensor based on indices

y = tf.constant([10, 20, 30, 40, 50])

gathered_y = tf.gather(y, indices=[0, 2, 4])

print_bold('\nGather elements from a tensor based on indices:')

print(gathered_y)

# Scatter values into a new tensor based on indices

z = tf.constant([1, 2, 3])

scattered_z = tf.scatter_nd(indices=[[0], [2], [3]], updates=z, shape=[4])

print_bold('\nScatter values into a new tensor based on indices:')

print(scattered_z)

Extract a slice of a tensor based on a starting position and a size:

tf.Tensor(

[[2 3]

[5 6]], shape=(2, 2), dtype=int32)

Gather elements from a tensor based on indices:

tf.Tensor([10 30 50], shape=(3,), dtype=int32)

Scatter values into a new tensor based on indices:

tf.Tensor([1 0 2 3], shape=(4,), dtype=int32)
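The description above mentions modifying a region of a tensor, which the example does not cover. The following sketch, using assumed sample values, shows Python-style indexing as an alternative to tf.slice, tf.gather along a non-default axis, and tf.tensor_scatter_nd_update, which returns a modified copy because tensors themselves are immutable.

```python
import tensorflow as tf

x = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

# Python-style indexing selects the same block as tf.slice(x, [0, 1], [2, 2]).
block = x[0:2, 1:3]

# With axis=1, tf.gather picks whole columns instead of rows.
columns = tf.gather(x, indices=[0, 2], axis=1)

# An "update" produces a new tensor; x itself is left unchanged.
updated = tf.tensor_scatter_nd_update(x, indices=[[0, 0], [2, 2]], updates=[-1, -9])

print(block)
print(columns)
print(updated)
```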

Stacking and Concatenating: These operations combine multiple tensors along a specified axis. They can be useful for merging or expanding tensors.

Example:

tf.stack stacks a list of tensors along a new axis. tf.concat concatenates a list of tensors along an existing axis.

Code:

import tensorflow as tf

# Stack a list of tensors along a new axis

x = tf.constant([1, 2, 3])

y = tf.constant([4, 5, 6])

stacked_tensors = tf.stack([x, y], axis=0)

print_bold('Stack a list of tensors along a new axis:')

print(stacked_tensors)

# Concatenate a list of tensors along an existing axis

z = tf.constant([7, 8, 9])

concatenated_tensors = tf.concat([x, y, z], axis=0)

print_bold('\nConcatenate a list of tensors along an existing axis:')

print(concatenated_tensors)

Stack a list of tensors along a new axis:

tf.Tensor(

[[1 2 3]

[4 5 6]], shape=(2, 3), dtype=int32)

Concatenate a list of tensors along an existing axis:

tf.Tensor([1 2 3 4 5 6 7 8 9], shape=(9,), dtype=int32)
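The choice of axis changes the result shape, which the example above only shows for axis=0. As a brief sketch with assumed inputs: stacking along axis=1 pairs up corresponding elements, and concatenation only requires the shapes to match on the axes that are not being joined.

```python
import tensorflow as tf

x = tf.constant([1, 2, 3])
y = tf.constant([4, 5, 6])
stacked_cols = tf.stack([x, y], axis=1)   # new trailing axis: shape (3, 2)

a = tf.constant([[1, 2], [3, 4]])
b = tf.constant([[5], [6]])
joined = tf.concat([a, b], axis=1)        # shapes (2, 2) and (2, 1) give (2, 3)

print(stacked_cols)
print(joined)
```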

Splitting and Unstacking: These operations divide a tensor into multiple tensors along a specified axis. They can be useful for splitting or reducing tensors.

Example:

tf.split splits a tensor into a list of tensors of equal size along a specified axis. tf.unstack unstacks a tensor along a specified axis and returns a list of tensors.

Code:

import tensorflow as tf

# Split a tensor into a list of tensors of equal size along a specified axis

x = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

split_tensors = tf.split(x, num_or_size_splits=3, axis=0)

print_bold('Split a tensor into a list of tensors of equal size along a specified axis:')

print(split_tensors)

# Unstack a tensor along a specified axis and return a list of tensors

y = tf.constant([[1, 2, 3], [4, 5, 6]])

unstacked_tensors = tf.unstack(y, axis=1)

print_bold('\nUnstack a tensor along a specified axis and return a list of tensors:')

print(unstacked_tensors)

Split a tensor into a list of tensors of equal size along a specified axis:

[<tf.Tensor: shape=(1, 3), dtype=int32, numpy=array([[1, 2, 3]])>, <tf.Tensor: shape=(1, 3), dtype=int32, numpy=array([[4, 5, 6]])>, <tf.Tensor: shape=(1, 3), dtype=int32, numpy=array([[7, 8, 9]])>]

Unstack a tensor along a specified axis and return a list of tensors:

[<tf.Tensor: shape=(2,), dtype=int32, numpy=array([1, 4])>, <tf.Tensor: shape=(2,), dtype=int32, numpy=array([2, 5])>, <tf.Tensor: shape=(2,), dtype=int32, numpy=array([3, 6])>]
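tf.split is not limited to equal pieces: passing a list of sizes for num_or_size_splits produces uneven parts. The sketch below uses assumed sample data and also shows that tf.stack reverses tf.unstack.

```python
import tensorflow as tf

x = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

# Split the columns into a piece of width 1 and a piece of width 2.
left, right = tf.split(x, num_or_size_splits=[1, 2], axis=1)

# Unstacking removes the chosen axis; stacking the pieces back restores x.
rows = tf.unstack(x, axis=0)
restored = tf.stack(rows, axis=0)

print(left)
print(right)
print(restored)
```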

These are some examples of Array Operations in TensorFlow, which can be used for various purposes and applications involving data manipulation, transformation, and reshaping. For more information and examples, you can refer to the TensorFlow documentation.

12.5.3. String Operations

String operations are a subset of TensorFlow operations that handle string tensors (tf.string). A string tensor is a tensor of type tf.string that can store byte strings of arbitrary lengths. String tensors can be scalars, vectors, or higher-rank tensors, and they can be created from Python strings or byte literals [TensorFlow Developers, 2023].

Some of the common string operations are [TensorFlow Developers, 2023]:

Splitting and Joining: These operations split a string tensor into a list of substrings, or join a list of string tensors into a single string tensor. They can be useful for tokenizing, parsing, or concatenating strings.

Example:

tf.strings.split splits a string tensor into substrings based on a delimiter; a scalar input yields a 1-D tensor, while higher-rank inputs yield a ragged tensor. tf.strings.join joins a list of string tensors element-wise into a single string tensor, with an optional separator.

Code:

import tensorflow as tf

# Split a string tensor by whitespace

s = tf.constant("Hello Calgary!")

split_strings = tf.strings.split(s, sep=" ")

print_bold('Split a string tensor by whitespace:')

print(split_strings)

# Join the elements of a string tensor (no separator is passed, so they are concatenated directly)

t = tf.constant(["Red", "Green", "Blue"])

joined_strings = tf.strings.join(t)

print_bold('\nJoin the elements of a string tensor without a separator:')

print(joined_strings)

Split a string tensor by whitespace:

tf.Tensor([b'Hello' b'Calgary!'], shape=(2,), dtype=string)

Join the elements of a string tensor without a separator:

tf.Tensor(b'RedGreenBlue', shape=(), dtype=string)
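The joined output above contains no delimiter because no separator argument was passed. As a hedged sketch with assumed inputs, the snippet below shows two ways to insert one: tf.strings.reduce_join collapses a single string tensor, while tf.strings.join combines several tensors element-wise. It also splits a vector of strings, which gives a ragged tensor because each row can hold a different number of tokens.

```python
import tensorflow as tf

colors = tf.constant(["Red", "Green", "Blue"])

# Collapse one string tensor into a scalar, with a separator between elements.
joined = tf.strings.reduce_join(colors, separator=",")

# Combine two tensors element by element.
pairs = tf.strings.join([colors, tf.constant(["1", "2", "3"])], separator="=")

# Splitting a vector of strings returns a tf.RaggedTensor.
sentences = tf.constant(["Hello Calgary!", "Hi"])
tokens = tf.strings.split(sentences)

print(joined.numpy())
print(pairs.numpy())
print(tokens.to_list())
```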

Length and Hashing: These operations compute the length or the hash value of a string tensor. They can be useful for filtering, sorting, or hashing strings.

Example:

tf.strings.length returns the length of each string in a tensor, in bytes or Unicode characters. tf.strings.to_hash_bucket assigns each string in a tensor to a hash bucket, based on its hash value.

Code:

import tensorflow as tf

# Get the length of each string in bytes

s = tf.constant(["Hello", "こんにちは", "你好"])

length_bytes = tf.strings.length(s, unit="BYTE")

print_bold('Get the length of each string in bytes:')

print(length_bytes)

# Get the length of each string in Unicode characters

length_unicode = tf.strings.length(s, unit="UTF8_CHAR")

print_bold('\nGet the length of each string in Unicode characters:')

print(length_unicode)

# Assign each string to a hash bucket

hash_buckets = tf.strings.to_hash_bucket(s, num_buckets=10)

print_bold('\nAssign each string to a hash bucket:')

print(hash_buckets)

Get the length of each string in bytes:

tf.Tensor([ 5 15 6], shape=(3,), dtype=int32)

Get the length of each string in Unicode characters:

tf.Tensor([5 5 2], shape=(3,), dtype=int32)

Assign each string to a hash bucket:

tf.Tensor([1 8 3], shape=(3,), dtype=int64)

Conversion and Encoding: These operations convert a string tensor to a different type or format, such as numbers, booleans, or base64. They can be useful for parsing, encoding, or decoding strings.

Example:

tf.strings.to_number converts each string in a tensor to a numeric tensor of a specified dtype. tf.io.encode_base64 encodes each string in a tensor to base64 format.

Code:

import tensorflow as tf

# Convert each string to a float tensor

s = tf.constant(["1.0", "3.14", "2.718"])

float_tensors = tf.strings.to_number(s, out_type=tf.float32)

print_bold('Convert each string to a float tensor:')

print(float_tensors)

# Encode each string to base64

t = tf.constant(["Hello", "world"])

base64_encoded = tf.io.encode_base64(t)

print_bold('\nEncode each string to base64:')

print(base64_encoded)

Convert each string to a float tensor:

tf.Tensor([1. 3.14 2.718], shape=(3,), dtype=float32)

Encode each string to base64:

tf.Tensor([b'SGVsbG8' b'd29ybGQ'], shape=(2,), dtype=string)
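The encoded strings above carry no trailing "=" padding, since tf.io.encode_base64 produces unpadded, web-safe base64 by default. The sketch below, using assumed sample values, shows that the conversions are reversible: tf.io.decode_base64 recovers the original bytes, and tf.strings.as_string turns numbers back into strings.

```python
import tensorflow as tf

words = tf.constant(["Hello", "world"])
encoded = tf.io.encode_base64(words)     # web-safe base64, unpadded by default
decoded = tf.io.decode_base64(encoded)   # back to the original bytes

counts = tf.strings.to_number(["7", "42"], out_type=tf.int32)
as_text = tf.strings.as_string(counts)   # numbers back to strings

print(decoded.numpy())
print(counts.numpy())
print(as_text.numpy())
```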

These are some of the string operations available in TensorFlow, which can be used for different purposes and applications involving string manipulation and processing. For more information, please refer to the TensorFlow documentation.

12.5.4. Control Flow Operations

Control flow operations are a subset of TensorFlow operations that enable conditional execution and looping of tensors, using constructs such as if, while, and case. Control flow operations can be useful for various purposes and applications involving dynamic and complex logic and computation.

Some of the common control flow operations are [TensorFlow Developers, 2023]:

Conditional Execution: These operations select which computation runs, or which values are kept, based on a condition. They can be useful for implementing branching logic or decision making.

Example:

tf.cond calls one of two functions (branches) depending on a scalar boolean predicate. tf.where selects elements from one of two tensors based on a boolean mask.

Code:

import tensorflow as tf

# Execute one of two tensors based on a boolean predicate

x = tf.constant(1)

y = tf.constant(2)

result_cond = tf.cond(x < y, lambda: tf.add(x, y), lambda: tf.subtract(x, y))

print_bold('Execute one of two tensors based on a boolean predicate:')

print(result_cond)

# Return elements from one of two tensors based on a boolean mask

a = tf.constant([1, 2, 3])

b = tf.constant([4, 5, 6])

mask = tf.constant([True, False, True])

result_where = tf.where(mask, a, b)

print_bold('\nReturn elements from one of two tensors based on a boolean mask:')

print(result_where)

Execute one of two tensors based on a boolean predicate:

tf.Tensor(3, shape=(), dtype=int32)

Return elements from one of two tensors based on a boolean mask:

tf.Tensor([1 5 3], shape=(3,), dtype=int32)
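The two operations above differ in what they evaluate. The sketch below assumes eager execution (the TensorFlow 2 default) and uses hypothetical branch functions that record when they are called: tf.cond invokes only the selected branch, whereas tf.where receives two tensors that have already been computed and picks between them element by element. Called with only a mask, tf.where returns the positions of the true entries.

```python
import tensorflow as tf

calls = []  # records which branch functions actually ran

def true_branch():
    calls.append("true")
    return tf.constant(1)

def false_branch():
    calls.append("false")
    return tf.constant(0)

# In eager mode, only the branch chosen by the predicate is called.
chosen = tf.cond(tf.constant(True), true_branch, false_branch)

# With a single argument, tf.where returns the indices of the True entries.
mask = tf.constant([True, False, True])
true_positions = tf.where(mask)

print(chosen.numpy(), calls)
print(true_positions.numpy())
```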

Looping: These operations run a computation repeatedly, either while a condition holds or once per element of a tensor. They can be useful for implementing iterative computation.

Example:

tf.while_loop repeatedly calls a body function on the loop variables while a condition function returns true. tf.map_fn applies a function to each element of a tensor along its first axis.

Code:

import tensorflow as tf

# Execute a body tensor while a condition tensor is true

i = tf.constant(0)

condition = lambda i: tf.less(i, 10)

body = lambda i: (tf.add(i, 1),)

result_while_loop = tf.while_loop(condition, body, [i])[0]

print_bold('Execute a body tensor while a condition tensor is true:')

print(result_while_loop)

# Apply a function to each element of a tensor or a list of tensors

x = tf.constant([1, 2, 3])

function_to_apply = lambda x: tf.square(x)

result_map_fn = tf.map_fn(function_to_apply, x)

print_bold('\nApply a function to each element of a tensor or a list of tensors:')

print(result_map_fn)

Execute a body tensor while a condition tensor is true:

tf.Tensor(10, shape=(), dtype=int32)

Apply a function to each element of a tensor or a list of tensors:

tf.Tensor([1 4 9], shape=(3,), dtype=int32)
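Loops usually carry more than one value. As a minimal sketch with assumed names, the snippet below threads a counter and a running total through tf.while_loop. It also uses the fn_output_signature argument of tf.map_fn, which is needed when the mapped function changes the dtype and is assumed here to be available (it was added in TensorFlow 2.3). For simple element-wise math, a vectorized op such as tf.square is typically the better choice.

```python
import tensorflow as tf

# Two loop variables: a counter and a running total (0 + 1 + ... + 9).
i0 = tf.constant(0)
total0 = tf.constant(0)
i_final, total = tf.while_loop(
    cond=lambda i, total: i < 10,
    body=lambda i, total: (i + 1, total + i),
    loop_vars=[i0, total0],
)

# The mapped function returns float32 although the input is int32.
x = tf.constant([1, 2, 3])
halves = tf.map_fn(lambda v: tf.cast(v, tf.float32) / 2.0, x,
                   fn_output_signature=tf.float32)

# Vectorized equivalent of mapping tf.square over each element.
squares = tf.square(x)

print(i_final.numpy(), total.numpy())
print(halves.numpy())
print(squares.numpy())
```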

Switching: These operations choose one of several branch functions based on a selector. They can be useful for implementing multiple choices or cases.

Example:

tf.switch_case calls one of a list of branch functions based on an integer index. tf.case calls the function paired with a true boolean predicate, or a default function if no predicate is true.

Code:

import tensorflow as tf

# Execute one of a list of tensors based on an integer selector

x = tf.constant(2)

branches = [lambda: tf.constant(0), lambda: tf.constant(1), lambda: tf.constant(2)]

result_switch_case = tf.switch_case(x, branches)

print_bold('Execute one of a list of tensors based on an integer selector:')

print(result_switch_case)

# Execute one of a list of tensors based on a list of boolean predicates

y = tf.constant(3)

pred_fn_pairs = [(tf.equal(y, 1), lambda: tf.constant(1)),

(tf.equal(y, 2), lambda: tf.constant(2))]

default = lambda: tf.constant(0)

result_case = tf.case(pred_fn_pairs, default, exclusive=True)

print_bold('\nExecute one of a list of tensors based on a list of boolean predicates:')

print(result_case)

Execute one of a list of tensors based on an integer selector:

tf.Tensor(2, shape=(), dtype=int32)

Execute one of a list of tensors based on a list of boolean predicates:

tf.Tensor(0, shape=(), dtype=int32)
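tf.switch_case also accepts a default branch, which the example above does not use. In this sketch the branch functions and the fallback are illustrative assumptions; an index outside the list is routed to the default.

```python
import tensorflow as tf

branches = [lambda: tf.constant("zero"), lambda: tf.constant("one")]
fallback = lambda: tf.constant("other")

in_range = tf.switch_case(tf.constant(1), branches, default=fallback)
out_of_range = tf.switch_case(tf.constant(5), branches, default=fallback)

print(in_range.numpy())
print(out_of_range.numpy())
```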

These are some of the control flow operations available in TensorFlow, which can be used for different purposes and applications involving dynamic and complex logic and computation. For more information, please refer to the TensorFlow documentation.

12.5.5. Reduction Operations

Reduction operations are a subset of TensorFlow operations that reduce tensors along specified dimensions, by applying functions such as sum, mean, max, min, etc. Reduction operations can be useful for various purposes and applications involving data aggregation and analysis.

Some of the common reduction operations are [TensorFlow Developers, 2023]:

Sum and Mean: These operations compute the sum or the mean of elements along a specified axis or across the entire tensor. They can be useful for calculating totals or averages of data.

Example:

tf.reduce_sum computes the sum of elements along a specified axis or across the entire tensor. tf.reduce_mean computes the mean of elements along a specified axis or across the entire tensor.

Code:

import tensorflow as tf

# Compute the sum of elements along a specified axis or across the entire tensor

x = tf.constant([[1, 2, 3], [4, 5, 6]])

sum_axis_0 = tf.reduce_sum(x, axis=0)

sum_total = tf.reduce_sum(x)

print_bold('Compute the sum of elements along a specified axis or across the entire tensor:')

print(sum_axis_0)

print(sum_total)

# Compute the mean of elements along a specified axis or across the entire tensor

y = tf.constant([[1, 2, 3], [4, 5, 6]], dtype=tf.float32)

mean_axis_1 = tf.reduce_mean(y, axis=1)

mean_total = tf.reduce_mean(y)

print_bold('\nCompute the mean of elements along a specified axis or across the entire tensor:')

print(mean_axis_1)

print(mean_total)

Compute the sum of elements along a specified axis or across the entire tensor:

tf.Tensor([5 7 9], shape=(3,), dtype=int32)

tf.Tensor(21, shape=(), dtype=int32)

Compute the mean of elements along a specified axis or across the entire tensor:

tf.Tensor([2. 5.], shape=(2,), dtype=float32)

tf.Tensor(3.5, shape=(), dtype=float32)
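The mean example above builds its input as float32 for a reason: tf.reduce_mean returns the dtype of its input, so an integer tensor gives an integer mean with the fractional part dropped. The sketch below uses assumed sample values to show this, along with keepdims, which keeps the reduced axis as a size-1 dimension so that the result still broadcasts against the input.

```python
import tensorflow as tf

ints = tf.constant([[1, 2], [3, 5]])

int_mean = tf.reduce_mean(ints)                          # integer result: 11 / 4 becomes 2
float_mean = tf.reduce_mean(tf.cast(ints, tf.float32))   # 2.75

row_sums = tf.reduce_sum(ints, axis=1, keepdims=True)    # shape (2, 1) instead of (2,)

print(int_mean)
print(float_mean)
print(row_sums)
```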

Max and Min: These operations compute the maximum or the minimum of elements along a specified axis or across the entire tensor. They can be useful for finding the largest or the smallest values of data.

Example:

tf.reduce_max computes the maximum of elements along a specified axis or across the entire tensor. tf.reduce_min computes the minimum of elements along a specified axis or across the entire tensor.

Code:

import tensorflow as tf

# Compute the maximum of elements along a specified axis or across the entire tensor

x = tf.constant([[1, 2, 3], [4, 5, 6]])

max_axis_1 = tf.reduce_max(x, axis=1)

max_total = tf.reduce_max(x)

print_bold('Compute the maximum of elements along a specified axis or across the entire tensor:')

print(max_axis_1)

print(max_total)

# Compute the minimum of elements along a specified axis or across the entire tensor

y = tf.constant([[1, 2, 3], [4, 5, 6]])

min_axis_0 = tf.reduce_min(y, axis=0)

min_total = tf.reduce_min(y)

print_bold('\nCompute the minimum of elements along a specified axis or across the entire tensor:')

print(min_axis_0)

print(min_total)

Compute the maximum of elements along a specified axis or across the entire tensor:

tf.Tensor([3 6], shape=(2,), dtype=int32)

tf.Tensor(6, shape=(), dtype=int32)

Compute the minimum of elements along a specified axis or across the entire tensor:

tf.Tensor([1 2 3], shape=(3,), dtype=int32)

tf.Tensor(1, shape=(), dtype=int32)
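Often the position of the extreme value matters more than the value itself, for example when picking the most likely class. As a short sketch with assumed data, tf.argmax and tf.argmin return indices along an axis rather than values.

```python
import tensorflow as tf

x = tf.constant([[1, 9, 3], [4, 5, 6]])

row_max = tf.reduce_max(x, axis=1)   # the largest value in each row
row_argmax = tf.argmax(x, axis=1)    # the column index of that value
col_argmin = tf.argmin(x, axis=0)    # the row index of each column's minimum

print(row_max.numpy())
print(row_argmax.numpy())
print(col_argmin.numpy())
```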

All and Any: These operations compute the logical AND or the logical OR of elements along a specified axis or across the entire tensor. They can be useful for checking the truth value of data.

Example:

tf.reduce_all computes the logical AND of elements along a specified axis or across the entire tensor. tf.reduce_any computes the logical OR of elements along a specified axis or across the entire tensor.

Code:

import tensorflow as tf

# Compute the logical AND of elements along a specified axis or across the entire tensor

x = tf.constant([[True, False, True], [False, False, True]])

logical_and_axis_1 = tf.reduce_all(x, axis=1)

logical_and_total = tf.reduce_all(x)

print_bold('Compute the logical AND of elements along a specified axis or across the entire tensor:')

print(logical_and_axis_1)

print(logical_and_total)

# Compute the logical OR of elements along a specified axis or across the entire tensor

y = tf.constant([[True, False, True], [False, False, True]])

logical_or_axis_0 = tf.reduce_any(y, axis=0)

logical_or_total = tf.reduce_any(y)

print_bold('\nCompute the logical OR of elements along a specified axis or across the entire tensor:')

print(logical_or_axis_0)

print(logical_or_total)

Compute the logical AND of elements along a specified axis or across the entire tensor:

tf.Tensor([False False], shape=(2,), dtype=bool)

tf.Tensor(False, shape=(), dtype=bool)

Compute the logical OR of elements along a specified axis or across the entire tensor:

tf.Tensor([ True False True], shape=(3,), dtype=bool)

tf.Tensor(True, shape=(), dtype=bool)
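In practice the boolean tensor usually comes from a comparison rather than from literal values. The sketch below assumes a hypothetical table of scores and a 0.5 pass mark, then summarizes the resulting mask with tf.reduce_all, tf.reduce_any, and tf.math.count_nonzero.

```python
import tensorflow as tf

scores = tf.constant([[0.9, 0.4, 0.7], [0.8, 0.6, 0.95]])
passed = scores >= 0.5   # boolean mask with the same shape as scores

all_passed_per_row = tf.reduce_all(passed, axis=1)
any_failed = tf.reduce_any(tf.logical_not(passed))
num_passed = tf.math.count_nonzero(passed)   # counts the True entries

print(all_passed_per_row.numpy())
print(any_failed.numpy())
print(num_passed.numpy())
```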

These are some of the reduction operations available in TensorFlow, which can be used for different purposes and applications involving data aggregation and analysis. For more information, please refer to the TensorFlow documentation.

12.5.6. Sparse Operations

12.5.6.1. Sparse Tensors

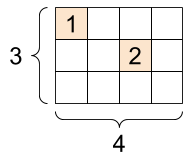

In certain scenarios, your data might exhibit sparsity, such as in a wide embedding space. TensorFlow provides support for handling sparse data through the use of tf.sparse.SparseTensor and associated operations. These tools allow you to efficiently store and manipulate sparse data within your TensorFlow computations [TensorFlow Developers, 2023].

Fig. 12.16 A tf.SparseTensor, shape: [3, 4]. Image courtesy of TensorFlow documentation [TensorFlow Developers, 2023].#

import tensorflow as tf

# Create a sparse tensor storing values by index

sparse_tensor = tf.sparse.SparseTensor(indices=[[0, 0], [1, 2]],

values=[1, 2],

dense_shape=[3, 4])

# Print the sparse tensor

print_bold("Sparse Tensor:")

print(sparse_tensor, "\n")

# Convert sparse tensor to dense

dense_tensor = tf.sparse.to_dense(sparse_tensor)

# Print the dense tensor

print_bold("Dense Tensor:")

print(dense_tensor)

Sparse Tensor:

SparseTensor(indices=tf.Tensor(

[[0 0]

[1 2]], shape=(2, 2), dtype=int64), values=tf.Tensor([1 2], shape=(2,), dtype=int32), dense_shape=tf.Tensor([3 4], shape=(2,), dtype=int64))

Dense Tensor:

tf.Tensor(

[[1 0 0 0]

[0 0 2 0]

[0 0 0 0]], shape=(3, 4), dtype=int32)

In this code, we’re showcasing the creation of a sparse tensor using tf.sparse.SparseTensor and the subsequent conversion of that sparse tensor to a dense tensor using tf.sparse.to_dense.

import tensorflow as tf

# Create a higher-dimensional sparse tensor

sparse_tensor = tf.sparse.SparseTensor(indices=[[0, 0, 0], [1, 2, 3], [2, 1, 2]],

values=[1, 2, 3],

dense_shape=[3, 4, 5])

# Print the sparse tensor

print_bold("Sparse Tensor:")

print(sparse_tensor, "\n")

# Convert sparse tensor to dense

dense_tensor = tf.sparse.to_dense(sparse_tensor)

# Print the dense tensor

print_bold("Dense Tensor:")

print(dense_tensor)

Sparse Tensor:

SparseTensor(indices=tf.Tensor(

[[0 0 0]

[1 2 3]

[2 1 2]], shape=(3, 3), dtype=int64), values=tf.Tensor([1 2 3], shape=(3,), dtype=int32), dense_shape=tf.Tensor([3 4 5], shape=(3,), dtype=int64))

Dense Tensor:

tf.Tensor(

[[[1 0 0 0 0]

[0 0 0 0 0]

[0 0 0 0 0]

[0 0 0 0 0]]

[[0 0 0 0 0]

[0 0 0 0 0]

[0 0 0 2 0]

[0 0 0 0 0]]

[[0 0 0 0 0]

[0 0 3 0 0]

[0 0 0 0 0]

[0 0 0 0 0]]], shape=(3, 4, 5), dtype=int32)

In this example, we’re creating a sparse tensor with a shape of [3, 4, 5].
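Both examples list their indices in row-major order, which is the ordering that many sparse operations expect. As a hedged sketch with assumed data, the snippet below builds a sparse tensor whose indices are deliberately out of order, sorts them with tf.sparse.reorder before converting to dense, and compares the number of stored values with the number of positions in the dense form.

```python
import tensorflow as tf

# Indices deliberately listed out of row-major order.
unordered = tf.sparse.SparseTensor(indices=[[1, 2], [0, 0]],
                                   values=[2, 1],
                                   dense_shape=[3, 4])

ordered = tf.sparse.reorder(unordered)   # sorts indices (and their values) row-major
dense = tf.sparse.to_dense(ordered)

stored = int(tf.size(ordered.values))              # values actually kept
total = int(tf.reduce_prod(ordered.dense_shape))   # positions in the dense form

print(ordered.indices.numpy())
print(dense.numpy())
print(stored, "of", total, "entries are stored explicitly")
```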

12.5.6.2. Sparse Operations

Sparse operations are a subset of TensorFlow operations that are designed for sparse tensors, which store tensors with many zeros efficiently by only keeping the non-zero values and their indices. Sparse operations can be useful for various purposes and applications involving sparse data and computation.

Some of the common sparse operations are [TensorFlow Developers, 2023]:

Creation and Conversion: These operations create or convert sparse tensors from or to other formats, such as dense tensors, coordinate lists, or index-value maps.

Example:

tf.sparse.from_dense creates a sparse tensor from a dense tensor. tf.sparse.to_dense converts a sparse tensor to a dense tensor. The tf.sparse.SparseTensor constructor builds a sparse tensor directly from indices, values, and a dense shape (a value-index map).

Code:

import tensorflow as tf

# Create a sparse tensor from a dense tensor

x = tf.constant([[0, 0, 1], [2, 0, 0], [0, 3, 0]])

sparse_tensor_from_dense = tf.sparse.from_dense(x)

print_bold('Create a sparse tensor from a dense tensor:')

print(sparse_tensor_from_dense)

# Convert a sparse tensor to a dense tensor

y = tf.SparseTensor(indices=[[0, 2], [1, 0], [2, 1]], values=[1, 2, 3], dense_shape=[3, 3])

dense_tensor_from_sparse = tf.sparse.to_dense(y)

print_bold('\nConvert a sparse tensor to a dense tensor:')

print(dense_tensor_from_sparse)

# Create a sparse tensor from a value-index map

z_indices = tf.constant([[0], [2], [4]], dtype=tf.int64)

z_values = tf.constant([10, 20, 30], dtype=tf.int32)

sparse_tensor_from_map = tf.sparse.SparseTensor(indices=z_indices, values=z_values, dense_shape=[5])

print_bold('\nCreate a sparse tensor from a value-index map:')

print(sparse_tensor_from_map)

Create a sparse tensor from a dense tensor:

SparseTensor(indices=tf.Tensor(

[[0 2]

[1 0]

[2 1]], shape=(3, 2), dtype=int64), values=tf.Tensor([1 2 3], shape=(3,), dtype=int32), dense_shape=tf.Tensor([3 3], shape=(2,), dtype=int64))

Convert a sparse tensor to a dense tensor:

tf.Tensor(

[[0 0 1]

[2 0 0]

[0 3 0]], shape=(3, 3), dtype=int32)

Create a sparse tensor from a value-index map:

SparseTensor(indices=tf.Tensor(

[[0]

[2]

[4]], shape=(3, 1), dtype=int64), values=tf.Tensor([10 20 30], shape=(3,), dtype=int32), dense_shape=tf.Tensor([5], shape=(1,), dtype=int64))
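
The conversions above can be sketched in plain Python to show what the coordinate (COO) format stores. This is an illustrative sketch, not TensorFlow's implementation; the helper names coo_to_dense and dense_to_coo are hypothetical and handle only the 2-D case.

```python
def coo_to_dense(indices, values, dense_shape):
    """Expand a 2-D COO triple into a nested list, filling gaps with zeros."""
    rows, cols = dense_shape
    dense = [[0] * cols for _ in range(rows)]
    for (r, c), v in zip(indices, values):
        dense[r][c] = v
    return dense


def dense_to_coo(dense):
    """Collect the indices and values of the non-zero entries in row-major order."""
    indices, values = [], []
    for r, row in enumerate(dense):
        for c, v in enumerate(row):
            if v != 0:
                indices.append([r, c])
                values.append(v)
    return indices, values


# Same data as the tf.sparse.to_dense / tf.sparse.from_dense example
dense = coo_to_dense([[0, 2], [1, 0], [2, 1]], [1, 2, 3], [3, 3])
indices, values = dense_to_coo(dense)
print(dense)
print(indices, values)
```

Round-tripping the data reproduces both the dense matrix and the index list printed by TensorFlow above.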

Reduction and Aggregation: These operations reduce or aggregate sparse tensors along specified dimensions, by applying functions such as sum, mean, max, min, etc. They can be useful for data analysis and compression.

Example:

tf.sparse.reduce_sum computes the sum of elements along a specified axis or across the entire sparse tensor.

tf.sparse.reduce_max computes the maximum of elements along a specified axis or across the entire sparse tensor.

Code:

import tensorflow as tf

# Compute the sum of elements along a specified axis or across the entire sparse tensor

x = tf.SparseTensor(indices=[[0, 0], [1, 2], [2, 3]], values=[1, 2, 3], dense_shape=[3, 4])

sum_axis_1 = tf.sparse.reduce_sum(x, axis=1)

sum_total = tf.sparse.reduce_sum(x)

print_bold('Compute the sum of elements along a specified axis or across the entire sparse tensor:')

print(sum_axis_1)

print(sum_total)

# Compute the maximum of elements along a specified axis or across the entire sparse tensor

y = tf.SparseTensor(indices=[[0, 0], [1, 2], [2, 3]], values=[1, 2, 3], dense_shape=[3, 4])

max_axis_0 = tf.sparse.reduce_max(y, axis=0)

max_total = tf.sparse.reduce_max(y)

print_bold('\nCompute the maximum of elements along a specified axis or across the entire sparse tensor:')

print(max_axis_0)

print(max_total)

Compute the sum of elements along a specified axis or across the entire sparse tensor:

tf.Tensor([1 2 3], shape=(3,), dtype=int32)

tf.Tensor(6, shape=(), dtype=int32)

Compute the maximum of elements along a specified axis or across the entire sparse tensor:

tf.Tensor([1 0 2 3], shape=(4,), dtype=int32)

tf.Tensor(3, shape=(), dtype=int32)
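
To see why a sparse reduction only needs to visit the stored entries, here is a minimal pure-Python sketch of a sum over COO data. The function sparse_reduce_sum is a hypothetical helper restricted to 2-D tensors; it is not how TensorFlow implements the op.

```python
def sparse_reduce_sum(indices, values, dense_shape, axis=None):
    """Sum COO entries; axis=None sums everything, otherwise that axis is collapsed."""
    if axis is None:
        return sum(values)
    kept_axis = 1 - axis  # 2-D only: the axis that survives the reduction
    out = [0] * dense_shape[kept_axis]
    for idx, v in zip(indices, values):
        out[idx[kept_axis]] += v
    return out


indices = [[0, 0], [1, 2], [2, 3]]
values = [1, 2, 3]
shape = [3, 4]

row_sums = sparse_reduce_sum(indices, values, shape, axis=1)
col_sums = sparse_reduce_sum(indices, values, shape, axis=0)
total = sparse_reduce_sum(indices, values, shape)
print(row_sums, col_sums, total)
```

The row sums and the total agree with the tf.sparse.reduce_sum output above; positions with no stored entry simply contribute zero.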

Manipulation and Transformation: These operations change the shape, size, order, and content of sparse tensors in various ways, such as reshaping, slicing, reordering, filling, and masking.

Example:

tf.sparse.reshape changes the shape of a sparse tensor to a specified shape while keeping the same stored values.

tf.sparse.slice extracts a slice of a sparse tensor based on a starting position and a size.

tf.sparse.fill_empty_rows inserts a default value at column 0 of every row that has no stored entry, and also returns a boolean vector indicating which rows were empty.

Code:

import tensorflow as tf

# Change the shape of a sparse tensor to a specified shape

x = tf.SparseTensor(indices=[[0, 0], [1, 2], [2, 3]], values=[1, 2, 3], dense_shape=[3, 4])

reshaped_sparse_tensor = tf.sparse.reshape(x, shape=[2, 6])

print_bold('Change the shape of a sparse tensor to a specified shape:')

print(reshaped_sparse_tensor)

# Extract a slice of a sparse tensor based on a starting position and a size

y = tf.SparseTensor(indices=[[0, 0], [1, 2], [2, 3]], values=[1, 2, 3], dense_shape=[3, 4])

sliced_sparse_tensor = tf.sparse.slice(y, start=[0, 1], size=[2, 2])

print_bold('\nExtract a slice of a sparse tensor based on a starting position and a size:')

print(sliced_sparse_tensor)

# Fill the empty rows of a sparse tensor with a default value

z = tf.SparseTensor(indices=[[0, 0], [1, 2], [2, 3]], values=[1, 2, 3], dense_shape=[3, 4])

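# fill_empty_rows returns the filled tensor and a boolean vector marking which rows were empty.

# Every row of z already holds a value, so nothing is inserted here.

filled_sparse_tensor, empty_row_indicator = tf.sparse.fill_empty_rows(z, default_value=0)

print_bold('\nFill the empty rows of a sparse tensor with a default value:')

print(filled_sparse_tensor)

print(empty_row_indicator)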

Change the shape of a sparse tensor to a specified shape:

SparseTensor(indices=tf.Tensor(

[[0 0]

[1 0]

[1 5]], shape=(3, 2), dtype=int64), values=tf.Tensor([1 2 3], shape=(3,), dtype=int32), dense_shape=tf.Tensor([2 6], shape=(2,), dtype=int64))

Extract a slice of a sparse tensor based on a starting position and a size:

SparseTensor(indices=tf.Tensor([[1 1]], shape=(1, 2), dtype=int64), values=tf.Tensor([2], shape=(1,), dtype=int32), dense_shape=tf.Tensor([2 2], shape=(2,), dtype=int64))

Fill the empty rows of a sparse tensor with a default value:

SparseTensor(indices=tf.Tensor(

[[0 0]

[1 2]

[2 3]], shape=(3, 2), dtype=int64), values=tf.Tensor([1 2 3], shape=(3,), dtype=int32), dense_shape=tf.Tensor([3 4], shape=(2,), dtype=int64))

tf.Tensor([False False False], shape=(3,), dtype=bool)
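
The index arithmetic behind the reshape and slice results above can be checked by hand. The sketch below uses hypothetical helpers (reshape_coo_indices, slice_coo) for 2-D COO data and assumes row-major ordering; it illustrates the idea rather than TensorFlow's actual kernels.

```python
def reshape_coo_indices(indices, old_shape, new_shape):
    """Map each (row, col) to its row-major position, then to the new shape."""
    old_cols, new_cols = old_shape[1], new_shape[1]
    assert old_shape[0] * old_cols == new_shape[0] * new_cols, "element count must match"
    out = []
    for r, c in indices:
        flat = r * old_cols + c
        out.append(list(divmod(flat, new_cols)))
    return out


def slice_coo(indices, values, start, size):
    """Keep entries inside the window and shift them so the window starts at (0, 0)."""
    kept_idx, kept_val = [], []
    for (r, c), v in zip(indices, values):
        if start[0] <= r < start[0] + size[0] and start[1] <= c < start[1] + size[1]:
            kept_idx.append([r - start[0], c - start[1]])
            kept_val.append(v)
    return kept_idx, kept_val


indices = [[0, 0], [1, 2], [2, 3]]
values = [1, 2, 3]

new_indices = reshape_coo_indices(indices, [3, 4], [2, 6])
slice_idx, slice_val = slice_coo(indices, values, start=[0, 1], size=[2, 2])
print(new_indices)
print(slice_idx, slice_val)
```

Only the indices change under a reshape; the stored values stay in the same order.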

These are some of the sparse operations available in TensorFlow, which can be used for different purposes and applications involving sparse data and computation. For more information, please refer to the TensorFlow documentation.

12.5.7. Neural Network Operations#

Neural network operations are the TensorFlow operations and Keras APIs tailored for building and training neural networks. They include activation functions, loss functions, optimizers, and layers.

Some of the common neural network operations are [TensorFlow Developers, 2023]:

Activation Functions: These operations apply non-linear functions to tensors, such as sigmoid, softmax, relu, tanh, etc. They can be useful for introducing non-linearity and complexity to neural networks, as well as for normalizing or scaling outputs.

Example:

tf.nn.sigmoid applies the sigmoid function to a tensor, which maps each element to a value between 0 and 1.

tf.nn.softmax applies the softmax function to a tensor, which rescales the values along the last axis (by default) so that they are positive and sum to 1, forming a probability distribution.

Code:

import tensorflow as tf

# Apply the sigmoid function to a tensor

x = tf.constant([-1, 0, 1], dtype=tf.float32)

sigmoid_result = tf.nn.sigmoid(x)

print_bold('Apply the sigmoid function to a tensor:')

print(sigmoid_result)

# Apply the softmax function to a tensor

y = tf.constant([[1, 2, 3], [4, 5, 6]], dtype=tf.float32)

softmax_result = tf.nn.softmax(y)

print_bold('\nApply the softmax function to a tensor:')

print(softmax_result)

Apply the sigmoid function to a tensor:

tf.Tensor([0.26894143 0.5 0.7310586 ], shape=(3,), dtype=float32)

Apply the softmax function to a tensor:

tf.Tensor(

[[0.09003057 0.24472848 0.66524094]

[0.09003057 0.24472848 0.66524094]], shape=(2, 3), dtype=float32)
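
The activation values above can be reproduced from the definitions using only the standard library. This is a sketch for checking the arithmetic; subtracting the row maximum inside softmax is a common numerical-stability step chosen here for illustration.

```python
import math


def sigmoid(x):
    """Logistic function 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(row):
    """Exponentiate and normalize; subtracting the max does not change the result."""
    shifted = [v - max(row) for v in row]
    exps = [math.exp(v) for v in shifted]
    total = sum(exps)
    return [e / total for e in exps]


sig = [sigmoid(v) for v in (-1.0, 0.0, 1.0)]
soft_a = softmax([1.0, 2.0, 3.0])
soft_b = softmax([4.0, 5.0, 6.0])
print(sig)
print(soft_a)
print(soft_b)
```

Both rows give the same softmax output because softmax depends only on the differences between the elements of a row, and [4, 5, 6] is [1, 2, 3] shifted by a constant.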

Loss Functions: These operations compute the loss or error between the predicted outputs and the actual outputs of a neural network, such as mean squared error, cross entropy, hinge loss, etc. They can be useful for measuring the performance and accuracy of neural networks, as well as for guiding the optimization process.

Example:

tf.keras.losses.MeanSquaredError computes the mean squared error between the predicted outputs and the actual outputs of a neural network.

tf.keras.losses.BinaryCrossentropy computes the binary cross entropy between the predicted outputs and the actual outputs of a neural network.

Code:

import tensorflow as tf

# Compute the mean squared error between the predicted outputs and the actual outputs of a neural network

y_true_mse = tf.constant([1, 2, 3], dtype=tf.float32)

y_pred_mse = tf.constant([2, 3, 4], dtype=tf.float32)

mse = tf.keras.losses.MeanSquaredError()

mse_result = mse(y_true_mse, y_pred_mse)

print_bold('Compute the mean squared error between the predicted outputs and the actual outputs of a neural network:')

print(mse_result)

# Compute the binary cross entropy between the predicted outputs and the actual outputs of a neural network

y_true_bce = tf.constant([0, 1, 0], dtype=tf.float32)

y_pred_bce = tf.constant([0.1, 0.9, 0.2], dtype=tf.float32)

bce = tf.keras.losses.BinaryCrossentropy()

bce_result = bce(y_true_bce, y_pred_bce)

print_bold('\nCompute the binary cross entropy between the predicted outputs and the actual outputs of a neural network:')

print(bce_result)

Compute the mean squared error between the predicted outputs and the actual outputs of a neural network:

tf.Tensor(1.0, shape=(), dtype=float32)

Compute the binary cross entropy between the predicted outputs and the actual outputs of a neural network:

tf.Tensor(0.1446214, shape=(), dtype=float32)
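
Both loss values above follow directly from the formulas. The sketch below recomputes them in plain Python; it assumes the predictions lie strictly between 0 and 1, so it omits the small clipping that Keras applies to avoid log(0).

```python
import math


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, y_pred):
    """Average of -[t*log(p) + (1-t)*log(1-p)] over all samples."""
    terms = [-(t * math.log(p) + (1 - t) * math.log(1 - p))
             for t, p in zip(y_true, y_pred)]
    return sum(terms) / len(terms)


mse_value = mean_squared_error([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
bce_value = binary_cross_entropy([0.0, 1.0, 0.0], [0.1, 0.9, 0.2])
print(mse_value)
print(bce_value)
```

Every prediction is off by exactly 1, so the mean squared error is 1. The cross entropy is the mean of -ln(0.9), -ln(0.9), and -ln(0.8).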

Optimizers: These operations update the parameters of a neural network based on the gradients of the loss function, using various optimization algorithms, such as gradient descent, Adam, RMSProp, etc. They can be useful for improving the performance and accuracy of neural networks, by minimizing the loss function.

Example:

tf.keras.optimizers.SGD updates the parameters of a neural network using stochastic gradient descent.

tf.keras.optimizers.Adam updates the parameters of a neural network using Adam, which is an adaptive learning rate optimization algorithm.

Code:

import tensorflow as tf

# Update the parameters of a neural network using stochastic gradient descent

model_sgd = tf.keras.models.Sequential([tf.keras.layers.Dense(1)])

sgd = tf.keras.optimizers.SGD(learning_rate=0.01)

x_sgd = tf.constant([[1.], [2.], [3.]], dtype=tf.float32) # Adjust input shape

y_true_sgd = tf.constant([[4.], [5.], [6.]], dtype=tf.float32) # Adjust target shape

with tf.GradientTape() as tape_sgd:

    predictions_sgd = model_sgd(x_sgd)

    loss_sgd = tf.reduce_mean(tf.square(predictions_sgd - y_true_sgd))

grads_sgd = tape_sgd.gradient(loss_sgd, model_sgd.trainable_variables)

sgd.apply_gradients(zip(grads_sgd, model_sgd.trainable_variables))

# Update the parameters of a neural network using Adam

model_adam = tf.keras.models.Sequential([tf.keras.layers.Dense(1)])

adam = tf.keras.optimizers.Adam(learning_rate=0.01)

x_adam = tf.constant([[1.], [2.], [3.]], dtype=tf.float32) # Adjust input shape

y_true_adam = tf.constant([[4.], [5.], [6.]], dtype=tf.float32) # Adjust target shape

with tf.GradientTape() as tape_adam:

    predictions_adam = model_adam(x_adam)

    loss_adam = tf.reduce_mean(tf.square(predictions_adam - y_true_adam))

grads_adam = tape_adam.gradient(loss_adam, model_adam.trainable_variables)

adam.apply_gradients(zip(grads_adam, model_adam.trainable_variables))

<tf.Variable 'UnreadVariable' shape=() dtype=int64, numpy=1>
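
The SGD update itself is a single line of arithmetic: each parameter moves against its gradient, scaled by the learning rate. The sketch below works one step by hand for a one-unit linear model pred = w * x + b with a mean-squared-error loss, on the same data as the example above. The starting values of w and b are hypothetical (Keras initializes them randomly), and the gradients are derived analytically rather than with tf.GradientTape.

```python
def mse_and_grads(w, b, xs, ys):
    """Return the MSE loss and its gradients with respect to w and b."""
    n = len(xs)
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errors) / n
    grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2.0 * sum(errors) / n
    return loss, grad_w, grad_b


xs, ys = [1.0, 2.0, 3.0], [4.0, 5.0, 6.0]
w, b = 0.5, 0.0          # hypothetical starting values
learning_rate = 0.01

loss_before, grad_w, grad_b = mse_and_grads(w, b, xs, ys)

# One plain SGD step: parameter <- parameter - learning_rate * gradient
w -= learning_rate * grad_w
b -= learning_rate * grad_b

loss_after, _, _ = mse_and_grads(w, b, xs, ys)
print(loss_before, loss_after)
print(w, b)
```

A single step lowers the loss slightly. Adam follows the same pattern but rescales each step using running averages of the gradient and its square.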

Layers: These operations create and apply layers to tensors, which are the basic building blocks of neural networks. Layers can perform various functions, such as linear transformation, convolution, pooling, dropout, batch normalization, etc. They can be useful for defining the structure and behavior of neural networks, as well as for adding complexity and functionality to them.

Example:

tf.keras.layers.Dense creates a densely connected layer, which computes activation(inputs · kernel + bias) with a specified number of units and an optional activation function.

tf.keras.layers.Conv2D creates a 2-D convolutional layer, which slides a specified number of learned filters over the input and applies an optional activation function.

Code:

import tensorflow as tf

# Create and apply a densely connected layer to a tensor

x_dense = tf.constant([[1, 2, 3], [4, 5, 6]], dtype=tf.float32) # Adjust input type

dense = tf.keras.layers.Dense(units=2, activation=tf.nn.relu)

output_dense = dense(x_dense)

print_bold('Densely connected layer output:')

print(output_dense.numpy())

# Create and apply a convolutional layer to a tensor

y_conv = tf.constant([[[[1.], [2.], [3.]], [[4.], [5.], [6.]], [[7.], [8.], [9.]]]], dtype=tf.float32) # Adjust input type

conv = tf.keras.layers.Conv2D(filters=1, kernel_size=2, activation=tf.nn.relu)

output_conv = conv(y_conv)

print_bold('\nConvolutional layer output:')

print(output_conv.numpy())

Densely connected layer output:

[[0. 0.]

[0. 0.]]

Convolutional layer output:

[[[[0.]

[0.]]

[[0.]

[0.]]]]
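
The layer weights above are initialized randomly, so the printed values differ from run to run. One plausible reading of the all-zero output is that every pre-activation happened to be negative and ReLU clipped it to zero. The sketch below shows the dense-layer computation with a hypothetical, hand-picked kernel in which one unit is positive and the other is clipped.

```python
def dense_relu(inputs, kernel, bias):
    """Compute relu(inputs @ kernel + bias) for nested-list inputs."""
    outputs = []
    for row in inputs:
        out_row = []
        for j in range(len(bias)):
            pre_activation = sum(x * kernel[i][j] for i, x in enumerate(row)) + bias[j]
            out_row.append(max(0.0, pre_activation))
        outputs.append(out_row)
    return outputs


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
kernel = [[1.0, -1.0], [0.0, -1.0], [1.0, -1.0]]  # hypothetical weights, shape (3, 2)
bias = [0.0, 0.0]

result = dense_relu(x, kernel, bias)
print(result)
```

The second unit has only negative weights, so its pre-activations are negative for these positive inputs and ReLU maps them to zero.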

These are some of the neural network operations available in TensorFlow, which can be used for different purposes and applications involving machine learning and deep learning. For more information, please refer to the TensorFlow documentation.

12.5.8. Variable Operations#

Variable operations create, modify, and manage variables (tf.Variable), which are mutable tensors whose values persist and can be updated in place. They underpin parameter optimization and other stateful computation.

Some of the common variable operations are [TensorFlow Developers, 2023]:

Creation and Initialization: These operations create and initialize variables with specified values, shapes, and data types. They can be useful for defining and allocating variables for neural networks or other models.

Example:

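tf.Variable creates a variable with an initial value, shape, and data type.

tf.zeros creates a tensor (not a variable) filled with zeros of a specified shape and data type; pass it to tf.Variable to obtain a zero-initialized variable.

tf.random.normal creates a tensor (not a variable) of random values drawn from a normal distribution, with a specified shape and data type; it is commonly used as the initial value of a variable.

Code: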

# Create a variable with an initial value, shape, and data type

x = tf.Variable(1, shape=(), dtype=tf.int32)

print_bold("x:")

print(x)

# Create a tensor filled with zeros of a specified shape and data type (wrap it in tf.Variable to make it mutable)

y = tf.zeros(shape=(2, 2), dtype=tf.float32)

print_bold("y:")

print(y)

# Create a tensor of random values from a normal distribution of a specified shape and data type

z = tf.random.normal(shape=(3, 3), dtype=tf.float32)

print_bold("z:")

print(z)

x:

<tf.Variable 'Variable:0' shape=() dtype=int32, numpy=1>

y:

tf.Tensor(

[[0. 0.]

[0. 0.]], shape=(2, 2), dtype=float32)

z:

tf.Tensor(

[[-0.24941136 1.8433316 -0.5462926 ]

[-1.6385643 0.15836732 -0.8668773 ]

[ 0.6604828 1.0676547 -0.20584674]], shape=(3, 3), dtype=float32)

Modification and Assignment: These operations modify and assign new values to variables, either directly or by applying functions or operations. They can be useful for updating and changing variables during training or inference.

Example:

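tf.Variable.assign assigns a new value to a variable.

tf.Variable.assign_add and tf.Variable.assign_sub add a value to, or subtract a value from, a variable in place.

tf.Variable.scatter_update overwrites the entries at the specified indices, which are passed as a tf.IndexedSlices object.

In TensorFlow 1.x these were the free functions tf.assign, tf.assign_add, tf.assign_sub, and tf.scatter_update; in TensorFlow 2.x they are methods of tf.Variable, and the old names remain only under tf.compat.v1.

Code: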

# Assign a new value to a variable

x = tf.Variable(1, shape=(), dtype=tf.int32)

x.assign(2)

print_bold("x:")

print(x)

# Assign the result of adding or subtracting a value to a variable

y = tf.Variable(1, shape=(), dtype=tf.int32)

y.assign_add(2)

print_bold("y (after adding):")

print(y)

y.assign_sub(1)

print_bold("y (after subtracting):")

print(y)

# Update a variable by scattering a value into specified indices

z = tf.Variable([1, 2, 3], shape=(3,), dtype=tf.int32)

indices = [1, 2]

updates = [4, 5]

sparse_delta = tf.IndexedSlices(values=updates, indices=indices)

z.scatter_update(sparse_delta)

print_bold("z (after scattering):")

print(z)

x:

<tf.Variable 'Variable:0' shape=() dtype=int32, numpy=2>

y (after adding):

<tf.Variable 'Variable:0' shape=() dtype=int32, numpy=3>

y (after subtracting):

<tf.Variable 'Variable:0' shape=() dtype=int32, numpy=2>

z (after scattering):

<tf.Variable 'Variable:0' shape=(3,) dtype=int32, numpy=array([1, 4, 5])>
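
The scatter update above pairs each index with one replacement value. A minimal pure-Python sketch of that pairing is shown below; the helper scatter_update is hypothetical and returns a new list, whereas tf.Variable.scatter_update modifies the variable in place.

```python
def scatter_update(values, indices, updates):
    """Return a copy of values with values[i] replaced by the matching update."""
    result = list(values)
    for i, u in zip(indices, updates):
        result[i] = u
    return result


z = scatter_update([1, 2, 3], indices=[1, 2], updates=[4, 5])
print(z)
```

Positions that are not listed in indices keep their original values.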

Optimization and Gradient Descent: These operations optimize the parameters of a neural network or other model based on the gradients of the loss function, using various optimization algorithms, such as gradient descent, Adam, RMSProp, etc. They can be useful for improving the performance and accuracy of models, by minimizing the loss function.

Example:

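tf.keras.optimizers.Optimizer is the base class that provides common methods and attributes for optimizers.

tf.keras.optimizers.SGD is a subclass that implements the gradient descent algorithm.

tf.keras.optimizers.Adam is a subclass that implements the Adam algorithm, which is an adaptive learning rate optimization algorithm.

The code reaches these classes through the shorter alias tf.optimizers. Their TensorFlow 1.x counterparts were tf.train.Optimizer, tf.train.GradientDescentOptimizer, and tf.train.AdamOptimizer, which in TensorFlow 2.x remain only under tf.compat.v1.train.

Code: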

# Create an optimizer that implements the gradient descent algorithm

optimizer_sgd = tf.optimizers.SGD(learning_rate=0.01)

# Create an optimizer that implements the Adam algorithm

optimizer_adam = tf.optimizers.Adam(learning_rate=0.01)

# Update the parameters of a model based on the gradients of the loss function using SGD

model_sgd = tf.keras.models.Sequential([tf.keras.layers.Dense(1)])

# x_sgd and y_true_sgd are the tensors defined in the optimizer example of Section 12.5.7

with tf.GradientTape() as tape_sgd:

    loss_sgd = tf.reduce_mean(tf.square(model_sgd(x_sgd) - y_true_sgd))

grads_sgd = tape_sgd.gradient(loss_sgd, model_sgd.trainable_variables)

optimizer_sgd.apply_gradients(zip(grads_sgd, model_sgd.trainable_variables))

# Update the parameters of a model based on the gradients of the loss function using Adam

model_adam = tf.keras.models.Sequential([tf.keras.layers.Dense(1)])

# x_adam and y_true_adam are the tensors defined in the optimizer example of Section 12.5.7

with tf.GradientTape() as tape_adam:

    loss_adam = tf.reduce_mean(tf.square(model_adam(x_adam) - y_true_adam))

grads_adam = tape_adam.gradient(loss_adam, model_adam.trainable_variables)

optimizer_adam.apply_gradients(zip(grads_adam, model_adam.trainable_variables))

# Print model summary for verification

print_bold("Model Summary (SGD):")

model_sgd.summary()

print_bold("\nModel Summary (Adam):")

model_adam.summary()

Model Summary (SGD):

Model: "sequential_13"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_18 (Dense) (3, 1) 2

=================================================================

Total params: 2 (8.00 Byte)

Trainable params: 2 (8.00 Byte)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

Model Summary (Adam):

Model: "sequential_14"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_19 (Dense) (3, 1) 2

=================================================================

Total params: 2 (8.00 Byte)

Trainable params: 2 (8.00 Byte)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

These are some of the variable operations available in TensorFlow, which can be used for different purposes and applications involving parameter optimization and stateful computation. For more information, please refer to the TensorFlow documentation.

12.5.9. I/O Operations#

I/O operations are the TensorFlow operations that read and write data from or to various sources, such as files, queues, or networks.

Some of the common I/O operations are [TensorFlow Developers, 2023]:

Reading and Writing Files: These operations read and write data from or to files, such as text files, binary files, image files, audio files, etc. They can be useful for loading and saving data from or to local or remote storage.

Example:

tf.io.read_file reads the entire contents of a file as a scalar string tensor.

tf.io.decode_image decodes the encoded bytes of an image (BMP, GIF, JPEG, or PNG) into a tensor, which is uint8 by default.

tf.io.write_file writes a scalar string tensor to a file.

Code:

# Read the entire contents of a file as a string tensor

filename_text = "test_files/test.txt"

content_text = tf.io.read_file(filename_text)

print_bold("File Content (Text):")

print(content_text)

# Decode an image file into a uint8 tensor

filename_image = "test_files/test.jpg"

image_data = tf.io.read_file(filename_image)

image = tf.io.decode_image(image_data)

print_bold("\nImage Tensor:")

print(image)

# Write a string tensor to a file

filename_output = "test_files/output.txt"

content_output = tf.constant("Hello Calgary")

tf.io.write_file(filename_output, content_output)

print_bold("\nFile 'output.txt' has been written.")

File Content (Text):

tf.Tensor(b'ENGG 680 - Fall 2023', shape=(), dtype=string)

Image Tensor:

tf.Tensor(

[[[255 255 255]

[255 255 255]

[255 255 255]

...

[255 255 255]

[255 255 255]

[255 255 255]]

[[255 255 255]

[255 255 255]

[255 255 255]

...

[255 255 255]

[255 255 255]

[255 255 255]]

[[255 255 255]

[255 255 255]

[255 255 255]

...

[255 255 255]

[255 255 255]

[255 255 255]]

...

[[255 255 255]

[255 255 255]

[255 255 255]

...

[255 255 255]

[255 255 255]

[255 255 255]]

[[255 255 255]

[255 255 255]

[255 255 255]

...

[255 255 255]

[255 255 255]

[255 255 255]]

[[255 255 255]

[255 255 255]

[255 255 255]

...

[255 255 255]

[255 255 255]

[255 255 255]]], shape=(100, 100, 3), dtype=uint8)

File 'output.txt' has been written.

Reading and Writing Datasets: These operations build and transform datasets (tf.data.Dataset objects), which are collections of elements that can be iterated over. Datasets can be created from various sources, such as tensors, files, and generators, and are useful for processing and transforming data in batches or streams.

Example:

tf.data.Dataset.from_tensor_slices creates a dataset from a tensor or a tuple of tensors, slicing along the first dimension.

tf.data.Dataset.map applies a function to each element of a dataset.

tf.data.Dataset.batch combines consecutive elements of a dataset into batches.

Code:

# Create a data set from a tensor or a list of tensors

x = tf.constant([1, 2, 3])

y = tf.constant([4, 5, 6])

dataset = tf.data.Dataset.from_tensor_slices((x, y))

print_bold("TensorSliceDataset:")

print(dataset)

# Apply a function to each element of a data set

def add_one(x, y):

    return x + 1, y + 1

dataset_mapped = dataset.map(add_one)

print_bold("\nMapDataset:")

print(dataset_mapped)

# Combine consecutive elements of a data set into batches

dataset_batched = dataset_mapped.batch(2)

print_bold("\nBatchDataset:")

print(dataset_batched)

TensorSliceDataset:

<_TensorSliceDataset element_spec=(TensorSpec(shape=(), dtype=tf.int32, name=None), TensorSpec(shape=(), dtype=tf.int32, name=None))>

MapDataset:

<_MapDataset element_spec=(TensorSpec(shape=(), dtype=tf.int32, name=None), TensorSpec(shape=(), dtype=tf.int32, name=None))>

BatchDataset:

<_BatchDataset element_spec=(TensorSpec(shape=(None,), dtype=tf.int32, name=None), TensorSpec(shape=(None,), dtype=tf.int32, name=None))>
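
Printing a dataset shows only its element specification, not its contents. To make the slice, map, and batch pipeline concrete, here is a pure-Python analogue built from generators. The helpers from_slices and batch are hypothetical stand-ins and do not use tf.data; they only mimic the order in which elements flow through the three stages.

```python
from itertools import islice


def from_slices(*columns):
    """Pair up the i-th items of each column, like slicing along the first axis."""
    return zip(*columns)


def add_one(x, y):
    return x + 1, y + 1


def batch(iterable, batch_size):
    """Group consecutive elements; the last batch may be smaller."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        # Turn a list of (x, y) pairs into a pair of lists (xs, ys)
        yield tuple(list(column) for column in zip(*chunk))


pairs = from_slices([1, 2, 3], [4, 5, 6])
mapped = (add_one(x, y) for x, y in pairs)
batches = list(batch(mapped, 2))
print(batches)
```

Three elements with a batch size of 2 give one full batch and one partial batch, which is why the batched element specification above has shape (None,) rather than a fixed length.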

Reading and Writing TFRecords: These operations read and write data from or to TFRecords, which are binary files that store data as a sequence of protocol buffers. TFRecords can be useful for storing and loading large or complex data efficiently and reliably.

Example:

tf.io.TFRecordWriter creates a writer that writes data to a TFRecord file.

tf.train.Example creates an example that stores data as a protocol buffer.

tf.data.TFRecordDataset creates a dataset that reads data from a TFRecord file.

Code:

# Create a writer that writes data to a TFRecord file

filename = "test_files/test.tfrecord"

writer = tf.io.TFRecordWriter(filename)

print_bold(f"TFRecordWriter created for file: {filename}")

# Create an example that stores data as a protocol buffer

x = tf.train.Feature(int64_list=tf.train.Int64List(value=[1]))

y = tf.train.Feature(int64_list=tf.train.Int64List(value=[4]))

example = tf.train.Example(features=tf.train.Features(feature={"x": x, "y": y}))

print_bold("\nExample:")

print(example)

# Write the example to the TFRecord file

writer.write(example.SerializeToString())

writer.close()

print_bold(f"\nExample written to TFRecord file: {filename}")

# Create a data set that reads data from a TFRecord file

dataset = tf.data.TFRecordDataset(filename)

print_bold("\nTFRecordDataset created:")

print(dataset)

TFRecordWriter created for file: test_files/test.tfrecord

Example:

features {

  feature {

    key: "x"

    value {

      int64_list {

        value: 1

      }

    }

  }

  feature {

    key: "y"

    value {

      int64_list {

        value: 4

      }

    }

  }

}

Example written to TFRecord file: test_files/test.tfrecord

TFRecordDataset created:

<TFRecordDatasetV2 element_spec=TensorSpec(shape=(), dtype=tf.string, name=None)>

These are some of the I/O operations available in TensorFlow, which can be used for different purposes and applications involving data input and output. For more information, please refer to the TensorFlow documentation.

12.5.10. Image and Signal Processing Operations#

Image and signal processing operations are the TensorFlow operations specific to image and signal processing tasks. They include functions for resizing, cropping, filtering, and transforming images and signals.

Some of the common image and signal processing operations are [TensorFlow Developers, 2023]:

Resizing and Cropping: These operations change the size or the region of interest of images or signals, such as scaling, padding, centering, or cropping. They can be useful for adjusting the resolution or the aspect ratio of images or signals, or for extracting specific parts of them.

Example:

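tf.image.resize: Resizes an image or a batch of images to a specified size using a chosen interpolation method.

tf.image.crop_and_resize: Crops regions from a batch of images using normalized bounding boxes and resizes each crop to a common size.

tf.signal.dct: Computes the discrete cosine transform (DCT) of a signal or a batch of signals along the last axis. It maps the signal to frequency-domain coefficients; it does not resample the signal or change its number of samples.

Code: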

# Resize an image or a batch of images to a specified size using a specified method

image = tf.constant([[[[1], [2], [3]], [[4], [5], [6]], [[7], [8], [9]]]], dtype=tf.float32)

resized_image = tf.image.resize(image, size=[2, 2], method=tf.image.ResizeMethod.BILINEAR)

print_bold("Resized Image:")

print(resized_image)

# Crop and resize a batch of images using bounding boxes

image = tf.constant([[[[1], [2], [3]], [[4], [5], [6]], [[7], [8], [9]]]], dtype=tf.float32)

boxes = tf.constant([[0, 0, 1, 1], [0.5, 0.5, 1, 1]])

cropped_resized_images = tf.image.crop_and_resize(image, boxes=boxes, box_indices=[0, 0], crop_size=[2, 2])

print_bold("\nCropped and Resized Images:")

print(cropped_resized_images)

# Compute the discrete cosine transform (type II by default) of a batch of signals

signal = tf.constant([[1, 2, 3, 4, 5, 6, 7, 8]], dtype=tf.float32)

dct_coefficients = tf.signal.dct(signal)

print_bold("\nDCT Coefficients:")

print(dct_coefficients)

Resized Image:

tf.Tensor(

[[[[2. ]

[3.5]]

[[6.5]

[8. ]]]], shape=(1, 2, 2, 1), dtype=float32)

Cropped and Resized Images:

tf.Tensor(

[[[[1.]

[3.]]

[[7.]

[9.]]]

[[[5.]

[6.]]

[[8.]

[9.]]]], shape=(2, 2, 2, 1), dtype=float32)

DCT Coefficients:

tf.Tensor(

[[ 7.2000000e+01 -2.5769291e+01 9.5367432e-07 -2.6938186e+00

-4.7683716e-07 -8.0361128e-01 -9.5367432e-07 -2.0280755e-01]], shape=(1, 8), dtype=float32)
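
The coefficients printed by tf.signal.dct can be checked against the definition of the type-II DCT. The sketch below assumes the unnormalized convention with a leading factor of 2, X_k = 2 * sum_n x_n * cos(pi * (2n + 1) * k / (2N)), which is consistent with the first coefficient above (72 = 2 * 36). The function name dct_type2 is hypothetical.

```python
import math


def dct_type2(signal):
    """Unnormalized type-II DCT with a leading factor of 2."""
    n = len(signal)
    return [
        2.0 * sum(x * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                  for i, x in enumerate(signal))
        for k in range(n)
    ]


coeffs = dct_type2([1, 2, 3, 4, 5, 6, 7, 8])
print([round(c, 4) for c in coeffs])
```

The output has the same length as the input, which confirms that the transform changes the representation of the signal rather than its number of samples. The tiny non-zero values in the float32 output above (around 1e-7 to 1e-6) are rounding error on coefficients that are exactly zero analytically.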

Filtering and Convolution: These operations apply filters or convolution kernels to images or signals, such as smoothing, sharpening, edge detection, or feature extraction. They can be useful for enhancing, modifying, or analyzing images or signals, or for building convolutional neural networks.

Example:

tf.image.sobel_edges applies the Sobel filter to a batch of images and returns a tensor of shape [batch, height, width, channels, 2] whose last axis holds the vertical and horizontal gradients, in the order [dy, dx].

tf.nn.conv2d applies a 2-D convolution to an input tensor and a filter tensor and returns the output tensor.

tf.signal.fft applies the fast Fourier transform over the last axis of a complex-valued tensor and returns the complex spectrum.

Code:

# Apply the Sobel filter to an image or a batch of images and return the gradients along the x and y directions

image = tf.constant([[[[1], [2], [3]], [[4], [5], [6]], [[7], [8], [9]]]], dtype=tf.float32)

sobel_gradients = tf.image.sobel_edges(image)

print_bold("Sobel Filter Gradients:")

print(sobel_gradients)

# Apply a 2-D convolution to an input tensor and a filter tensor and return the output tensor

input_tensor = tf.constant([[[[1], [2], [3]], [[4], [5], [6]], [[7], [8], [9]]]], dtype=tf.float32)

filter_tensor = tf.constant([[[[1]], [[1]]], [[[1]], [[1]]]], dtype=tf.float32)

convolution_result = tf.nn.conv2d(input_tensor, filter_tensor, strides=[1, 1, 1, 1], padding="VALID")

print_bold("\nConvolution Result:")

print(convolution_result)

# Apply the fast Fourier transform to a signal or a batch of signals and return the complex spectrum

signal = tf.constant([[1, 2, 3, 4]], dtype=tf.float32)

fft_result = tf.signal.fft(tf.cast(signal, dtype=tf.complex64))

print_bold("\nFast Fourier Transform Result:")

print(fft_result)

Sobel Filter Gradients:

tf.Tensor(

[[[[[ 0. 0.]]

[[ 0. 8.]]

[[ 0. 0.]]]

[[[24. 0.]]

[[24. 8.]]

[[24. 0.]]]

[[[ 0. 0.]]

[[ 0. 8.]]

[[ 0. 0.]]]]], shape=(1, 3, 3, 1, 2), dtype=float32)

Convolution Result:

tf.Tensor(

[[[[12.]

[16.]]

[[24.]

[28.]]]], shape=(1, 2, 2, 1), dtype=float32)

Fast Fourier Transform Result:

tf.Tensor([[10.+0.j -2.+2.j -2.+0.j -2.-2.j]], shape=(1, 4), dtype=complex64)
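
The convolution and Fourier results above are small enough to verify by hand. The sketch below uses two hypothetical helpers: conv2d_valid, a single-channel sliding-window sum with no padding and no kernel flip (the cross-correlation convention used by deep-learning libraries), and dft, a direct evaluation of the discrete Fourier transform that the FFT computes more efficiently.

```python
import cmath


def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[r + i][c + j] * kernel[i][j]
             for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]


def dft(signal):
    """Direct O(N^2) discrete Fourier transform."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]


conv = conv2d_valid([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 1], [1, 1]])
spectrum = dft([1, 2, 3, 4])
print(conv)
print([complex(round(z.real, 6), round(z.imag, 6)) for z in spectrum])
```

Each convolution output is the sum of one 2x2 window, for example 1 + 2 + 4 + 5 = 12 for the top-left window. The first DFT coefficient is the sum of the samples, 10.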

Transformation and Augmentation: These operations transform or augment images or signals, such as rotating, flipping, cropping, scaling, translating, or adding noise. They can be useful for changing the perspective or the appearance of images or signals, or for increasing the diversity and size of data sets.

Example:

tf.image.rot90 rotates an image or a batch of images counter-clockwise by 90 degrees (once by default).

tf.image.random_flip_left_right randomly flips an image or a batch of images horizontally.

tf.image.central_crop crops the central region of an image or a batch of images to a specified fraction of the original size.

Code:

# Rotate an image or a batch of images by 90 degrees

rotated_image = tf.image.rot90(image)

print_bold("Rotated Image:")

print(rotated_image)

# Randomly flip an image or a batch of images horizontally

flipped_image = tf.image.random_flip_left_right(image)

print_bold("\nFlipped Image:")

print(flipped_image)

# Crop the central region of an image or a batch of images to a specified fraction of the original size

cropped_image = tf.image.central_crop(image, central_fraction=0.5)

print_bold("\nCropped Image:")

print(cropped_image)

Rotated Image:

tf.Tensor(

[[[[3.]

[6.]

[9.]]

[[2.]

[5.]

[8.]]

[[1.]

[4.]

[7.]]]], shape=(1, 3, 3, 1), dtype=float32)

Flipped Image:

tf.Tensor(

[[[[3.]

[2.]

[1.]]

[[6.]

[5.]

[4.]]

[[9.]

[8.]

[7.]]]], shape=(1, 3, 3, 1), dtype=float32)

Cropped Image:

tf.Tensor(

[[[[1.]

[2.]

[3.]]

[[4.]

[5.]

[6.]]

[[7.]

[8.]

[9.]]]], shape=(1, 3, 3, 1), dtype=float32)
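
The three transformations can be mimicked on a nested list to see what each one does to the pixel grid. The helpers below are hypothetical. In particular, the rounding rule in central_crop (truncate the border width to an integer) is an assumption about how the crop offsets are computed; under that assumption a 3x3 image with a fraction of 0.5 gets a border of int(0.75) = 0, which would explain why the cropped output above is the full image.

```python
def rot90_ccw(image):
    """Rotate counter-clockwise: transpose, then reverse the row order."""
    return [list(column) for column in zip(*image)][::-1]


def flip_left_right(image):
    """Mirror each row."""
    return [row[::-1] for row in image]


def central_crop(image, central_fraction):
    """Drop an equal border from each side (border width truncated to an integer)."""
    h, w = len(image), len(image[0])
    top = int((h - h * central_fraction) / 2)
    left = int((w - w * central_fraction) / 2)
    return [row[left:w - left] for row in image[top:h - top]]


image3 = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
image4 = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]

rotated = rot90_ccw(image3)
flipped = flip_left_right(image3)
crop3 = central_crop(image3, 0.5)
crop4 = central_crop(image4, 0.5)
print(rotated)
print(flipped)
print(crop3)
print(crop4)
```

With a 4x4 image the same fraction removes a one-pixel border and keeps the central 2x2 block, so a larger input makes the effect of the crop visible.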

These are some of the image and signal processing operations available in TensorFlow, which can be used for different purposes and applications involving image and signal analysis and synthesis. For more information, please refer to the TensorFlow documentation.